Background

BrightID is an open network whose server nodes arrive at the same graph state of interconnected users. (This is currently done using a blockchain to share operations that update the graph.) Server nodes then run analysis methods on the graph, each of which assigns verifications of a different verification type to a subset of users. An application can request the set of verifications assigned to one of its users.

Social Behaviors

The graph state is composed of actions that users record with reference to one another. The most basic action is a connection, which allows one user to express sentiments or facts about another.

One of the most useful sentiments answers the question “Is this user likely to behave honestly?” In other words, is the user I’m connecting to likely to be the only account of a person I know? BrightID should provide tools and procedures that let users make this assessment accurately. Malicious users will control sybil (duplicate) accounts and will make false assessments, and encourage or trick others into doing so, in order to help those accounts receive verifications.

Goal

It’s our goal to study social behaviors and provide tools, procedures, and analysis methods to assign verifications in a useful way. Utility is defined by the applications that use BrightID, but in general, it’s useful to limit the number of sybil accounts that receive verifications while enabling honest users to do so. A single application may use multiple verification types to classify its users.

First Attempt

Behaviors

Our first attempt at analysis used a graph that simply recorded mutual connections (did user A connect to user B and vice versa?). We provided a mobile reference application that allowed users to directly share names and photos and store them on each other’s devices. Within the list of stored users on their device, a user could flag another as “duplicate,” “fake,” or “deceased.” We also captured a timestamp of the last time two users made a mutual connection.
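
As a rough illustration of what that first graph recorded, here is a minimal Python sketch. It assumes a mutual connection exists once each user has connected to the other, and that the mutual-connection timestamp is the later of the two sides’ most recent connect actions. The class and method names (`ConnectionGraph`, `connect`, `flag`, `mutual_since`) are hypothetical and are not BrightID’s actual data model.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Flag(Enum):
    """Labels a user could attach to someone in their stored list."""
    DUPLICATE = "duplicate"
    FAKE = "fake"
    DECEASED = "deceased"


@dataclass
class ConnectionGraph:
    # (a, b) -> time of a's most recent "connect to b" action (directed).
    connects: dict[tuple[str, str], int] = field(default_factory=dict)
    # (flagger, flagged) -> the label the flagger chose.
    flags: dict[tuple[str, str], Flag] = field(default_factory=dict)

    def connect(self, a: str, b: str, timestamp: int) -> None:
        """Record that user a connected to user b at the given time."""
        self.connects[(a, b)] = timestamp

    def flag(self, flagger: str, flagged: str, label: Flag) -> None:
        """Record the label one user attached to another."""
        self.flags[(flagger, flagged)] = label

    def is_mutual(self, a: str, b: str) -> bool:
        """Only connections made in both directions count as graph edges."""
        return (a, b) in self.connects and (b, a) in self.connects

    def mutual_since(self, a: str, b: str) -> Optional[int]:
        """Timestamp of the latest mutual connection, or None if not mutual."""
        if not self.is_mutual(a, b):
            return None
        # The connection becomes mutual when the later side connects.
        return max(self.connects[(a, b)], self.connects[(b, a)])
```

For example, if Alice connects to Bob at time 100 and Bob connects back at time 130, the pair becomes a graph edge whose mutual-connection timestamp is 130.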

Analysis

We used a modified version of SybilRank called GroupSybilRank that traversed the graph of groups (user-specified clusters of mutually connected users).
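
For readers unfamiliar with SybilRank, its core is an early-terminated power iteration: trust starts on seed nodes, is split evenly across each node’s edges for roughly log2(n) rounds, and nodes are then ranked by trust divided by degree. The Python sketch below implements that core on a plain undirected graph. GroupSybilRank ran over a graph of groups rather than users, and its modifications aren’t described here, so nothing group-specific is modeled; the function name and the toy graph are illustrative assumptions.

```python
import math


def sybil_rank(adjacency: dict[str, set[str]], seeds: set[str],
               total_trust: float = 1.0) -> dict[str, float]:
    """Degree-normalized trust after early-terminated power iteration.

    `adjacency` must be symmetric (undirected). Higher scores suggest
    a node is more likely to be in the honest region.
    """
    nodes = list(adjacency)
    trust = {v: total_trust / len(seeds) if v in seeds else 0.0 for v in nodes}
    # Stop after about log2(n) rounds, before trust has time to leak
    # across the few "attack edges" into the sybil region.
    rounds = max(1, math.ceil(math.log2(len(nodes))))
    for _ in range(rounds):
        nxt = {v: 0.0 for v in nodes}
        for u in nodes:
            neighbors = adjacency[u]
            if not neighbors:
                nxt[u] += trust[u]  # an isolated node keeps its trust
                continue
            share = trust[u] / len(neighbors)
            for v in neighbors:
                nxt[v] += share
        trust = nxt
    # Divide by degree so nodes aren't favored just for having many edges.
    return {v: trust[v] / len(adjacency[v]) if adjacency[v] else 0.0
            for v in nodes}


# Toy graph: an honest triangle and a sybil triangle joined by one attack edge.
graph = {
    "a": {"b", "c"}, "b": {"a", "c"}, "c": {"a", "b", "x"},  # honest
    "x": {"c", "y", "z"}, "y": {"x", "z"}, "z": {"x", "y"},  # sybil
}
scores = sybil_rank(graph, seeds={"a"})
```

With `a` as the only seed, each of the honest nodes `a`, `b`, and `c` ends up with a higher score than any of the sybil nodes `x`, `y`, and `z`, because only one edge carries trust across the cut.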

Second Attempt

To accommodate the rapid growth of 1Hive’s Honey faucet application, we created a new procedure and a new verification type that would let more new users become verified. This verification type simply checked whether a user was connected to one of the seed groups created for use by SybilRank and related algorithms.
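
The rule is simple enough to state in a few lines. This sketch assumes “connected to a seed group” means having a mutual connection with at least one member of at least one seed group; the function names and the dictionary layout are hypothetical.

```python
def seed_groups_reached(user: str,
                        connections: dict[str, set[str]],
                        seed_groups: dict[str, set[str]]) -> set[str]:
    """Names of the seed groups that have a member `user` is connected to."""
    neighbors = connections.get(user, set())
    return {name for name, members in seed_groups.items() if neighbors & members}


def connected_to_seed_group(user: str,
                            connections: dict[str, set[str]],
                            seed_groups: dict[str, set[str]]) -> bool:
    """True if `user` is connected to any member of any seed group."""
    return bool(seed_groups_reached(user, connections, seed_groups))


seed_groups = {"seed-1": {"s1", "s2"}}
connections = {"newcomer": {"s2", "friend"}, "friend": {"newcomer"}}
```

Here `newcomer` met seed member `s2` at a verification party and qualifies, while `friend`, who only connected to `newcomer`, does not.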

Behaviors

We directed new users into “verification parties” where they could make mutual connections to other new users and to seed group members.

The Future

Analysis

To defeat graph analysis, sybils are likely to try to increase the number of honest users who consider them honest. Conductance-based analysis methods (those built on random walks or power iteration), such as SybilRank, rely on the transitivity of trust relationships, so every honest user persuaded to trust a sybil opens a path for trust to flow into the sybil region.

There are three strategies to defeat a sybil attack.

- Expose sybil-creating activities so that users engaging in them won’t be perceived as honest by honest users.

- Limit the number of sybils that can be verified by a semi-honest group (i.e., a group some of whose members are perceived as honest by honest users because the group’s sybil-making activities are hidden). Conductance-based methods seek to identify “sparse cuts” between honest and sybil regions.

- Limit the number of connections honest users make to sybils. This is behavioral and related to the first and second strategies.
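
A “sparse cut” is usually measured by conductance: the number of edges crossing the cut divided by the smaller of the two sides’ volumes (sums of degrees). The Python sketch below computes it for an undirected graph, using a made-up example. The third strategy works by keeping the numerator, the count of honest-to-sybil edges, small.

```python
def conductance(adjacency: dict[str, set[str]], side: set[str]) -> float:
    """Conductance of the cut between `side` and the rest of the graph.

    `adjacency` must be symmetric. Lower values mean a sparser cut.
    """
    rest = set(adjacency) - side
    # Count each crossing edge once, from the `side` end.
    crossing = sum(1 for u in side for v in adjacency[u] if v in rest)
    vol_side = sum(len(adjacency[u]) for u in side)
    vol_rest = sum(len(adjacency[u]) for u in rest)
    denominator = min(vol_side, vol_rest)
    if denominator == 0:
        raise ValueError("both sides of the cut need at least one edge")
    return crossing / denominator


graph = {
    "a": {"b", "c"}, "b": {"a", "c"}, "c": {"a", "b", "x"},  # honest
    "x": {"c", "y", "z"}, "y": {"x", "z"}, "z": {"x", "y"},  # sybil
}
honest = {"a", "b", "c"}
sparse = conductance(graph, honest)  # 1 crossing edge / volume 7 = 1/7

# Honest user b is tricked into also connecting to sybil y.
graph["b"].add("y")
graph["y"].add("b")
denser = conductance(graph, honest)  # 2 crossing edges / volume 8 = 0.25
```

One extra honest-to-sybil connection raises the conductance of the honest/sybil cut from 1/7 to 0.25, making the sybil region harder for a conductance-based method to separate.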

Behaviors

Recall the important question: Can we provide tools and procedures that allow a user to accurately assess whether another user they come in contact with is likely to behave honestly?

Referencing the strategies identified above, we should provide tools and procedures to let people

- Know a person they’re connecting to is the person they think it is (prevent impersonation).

- Report suspicious activity that might indicate a sybil attack.

- Rate how well they know each other.

- Evaluate each other’s honesty.

Prevent impersonation

Source

Since BrightID doesn’t associate personally identifiable information with BrightID identifiers, there is no way to search for someone within BrightID. Connection requests must therefore arrive through other communication channels, and users should check the source each request comes from. The question remains open: is there a way we can help them do this?

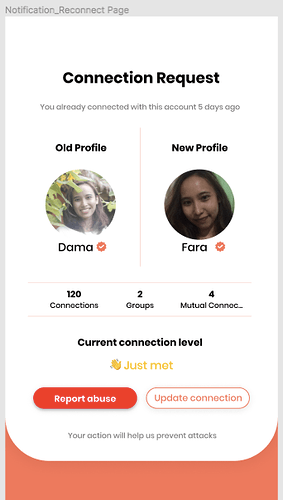

Peer-to-Peer profile sharing

The BrightID reference mobile app requires users to review any profile updates from their connections.
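
The point of the review step is that an incoming update can’t silently change how a connection appears, for example by swapping in someone else’s photo. A minimal sketch of that behavior, assuming updates are held as pending until the user approves or rejects them; `Profile`, `ConnectionProfiles`, and the `photo_hash` field are hypothetical and don’t describe the app’s actual storage.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Profile:
    name: str
    photo_hash: str  # hypothetical: a digest of the shared photo


@dataclass
class ConnectionProfiles:
    """Profiles of a user's connections, stored on that user's own device."""
    accepted: dict[str, Profile] = field(default_factory=dict)
    pending: dict[str, Profile] = field(default_factory=dict)

    def receive_update(self, connection_id: str, profile: Profile) -> None:
        # Incoming updates never overwrite what the user already accepted.
        self.pending[connection_id] = profile

    def review(self, connection_id: str, approve: bool) -> None:
        """Apply or discard the pending update after the user looks at it."""
        profile = self.pending.pop(connection_id)
        if approve:
            self.accepted[connection_id] = profile

    def shown(self, connection_id: str) -> Optional[Profile]:
        """The profile the app displays: always the last accepted one."""
        return self.accepted.get(connection_id)
```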

Report suspicious activity

In addition to labeling connections as “fake,” “duplicate,” or “deceased,” we’ve allowed users to label a new connection as “suspicious” and to report a connection link as spam without sharing any information with the attacker.

Let people rate how well they know each other

The new connection screen now offers three options, “suspicious,” “just met,” and “already know,” to describe the connection from the user’s perspective. Users can refine these further and add more information on the connection’s profile page.

Only people with a connection level of at least “already know” are in a position to evaluate each other’s honesty, which is the subject of the next section.
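
Because the levels are ordered, “at least already know” reduces to a single comparison. In this sketch the numeric values, the enum name, and the helper functions are assumptions; using `IntEnum` would let drilled-down levels be slotted in by value without changing the check.

```python
from enum import IntEnum


class ConnectionLevel(IntEnum):
    """Levels from the new connection screen, in increasing order."""
    SUSPICIOUS = 0
    JUST_MET = 1
    ALREADY_KNOW = 2


def can_evaluate_honesty(level: ConnectionLevel) -> bool:
    """Only connections of at least "already know" may evaluate honesty."""
    return level >= ConnectionLevel.ALREADY_KNOW


def evaluators(levels: dict[str, ConnectionLevel]) -> set[str]:
    """Connections (id -> level they chose) positioned to evaluate this user."""
    return {who for who, level in levels.items() if can_evaluate_honesty(level)}
```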

Let people evaluate each other’s honesty

Social proofs allow people to figuratively “compare notes” about someone they know. With zero-knowledge proofs, this can be done without publicly exposing that person’s BrightID identifier. If someone has chosen “already know” as their connection confidence level with a person p, they can confirm that everyone else choosing that level is referring to the same p, using a proof that p has published to a public profile (such as Twitter) that p’s friends would already be aware of. We can help BrightID users easily publish such a proof to a public profile and verify it with their friends at connection time, in a way that can’t be stolen and reused, either for impersonation or to prove a link between a public profile and a BrightID identifier.

The existence of a single public proof per person prevents the small-scale, so-called “split-personality” sybil attack where a person creates multiple accounts and attempts to get each verified through a different circle of friends.
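
The uniqueness rule behind this defense can be sketched as a registry in which each public proof backs at most one BrightID account. This Python sketch is deliberately simplified and is not zero-knowledge: it tags a public profile with a plain SHA-256 digest, which anyone could recompute to link the profile to the account, exactly the leak the proofs described above are meant to prevent. `PublicProofRegistry` and its methods are hypothetical.

```python
import hashlib


class PublicProofRegistry:
    """Binds each public-profile proof to at most one account.

    Illustration only: a real design would use zero-knowledge proofs so
    that this binding does not reveal which account owns which profile.
    """

    def __init__(self) -> None:
        self._owner_by_tag: dict[str, str] = {}

    @staticmethod
    def tag(public_profile: str) -> str:
        return hashlib.sha256(public_profile.encode("utf-8")).hexdigest()

    def bind(self, account: str, public_profile: str) -> bool:
        """True if `account` may use this proof; False if another account holds it."""
        owner = self._owner_by_tag.setdefault(self.tag(public_profile), account)
        return owner == account
```

A split-personality attacker whose first account is bound to their public profile gets `False` when a second account presents the same profile, so the second circle of friends has nothing valid to check against.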

Existing connections need to be notified if a person has changed their single public proof, so they can reconnect with that person to verify it again.
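
One way to decide who must be notified is to remember, per connection, a fingerprint of the proof that connection last verified, and compare it with the person’s current proof. The function name and the fingerprint representation are hypothetical; this post doesn’t specify how the app tracks this.

```python
def connections_to_notify(verified_fingerprints: dict[str, str],
                          current_fingerprint: str) -> list[str]:
    """Connections whose last-verified proof no longer matches the current one.

    `verified_fingerprints` maps connection id -> fingerprint of the public
    proof that connection checked when it last (re)connected.
    """
    return sorted(conn for conn, fp in verified_fingerprints.items()
                  if fp != current_fingerprint)
```

If Bob and Dan verified proof `p1` and Carol verified `p2`, publishing `p2` means Bob and Dan need to reconnect and verify again.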

Moving Forward

We are testing connection confidence levels. We will gather information about how they’re being used and evaluate how many people would be verified by analysis methods that use them. Applications can switch to those methods once they prove acceptable.

Once people are using higher confidence levels, we can enhance them with social proof checks.

This post doesn’t cover how seeds (which several analysis methods depend on) are selected, but we are improving that important process and will continue to do so.