This is the tool that I created using Nillion:

It is also on Nillion’s showcase page:

We discussed the tool in today’s Aura meeting. In summary, the user flow in the final version of the tool should be as follows. (Note: in this flow Nillion is used for storing programs and running computations, but it could potentially be replaced with another tool.)

- The user creates a Nillion key and userId by using Nillion’s MetaMask snap.

- The user verifies their userId with BrightID using a blind signature. In this step, we should either allow only users with a certain Aura Player verification level (i.e., trusted Aura Players), or let any verified BrightID user interact with the application while showing their player level alongside their votes.

- The user hashes a combination of the subject’s known identifiers (e.g., Discord ID + phone number).

- The user deploys a secret program to the Nillion network. The program has N parties: each party submits a string (here, a BrightID), and for each party the program determines what percentage of all parties entered the same string as that party. The Nillion network returns a programId for the program, and the user stores the hashed identifier together with the programId in a storage layer (e.g., a backend or a blockchain). Other users who want to find out whether the subject is a sybil attacker can then look up the program and submit the BrightID they know for that subject.
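The hashing and lookup steps above can be sketched roughly as follows. This is a minimal illustration under assumptions the flow does not specify: SHA-256 as the hash, a simple normalization of the identifiers before hashing, and an in-memory dictionary standing in for the backend or blockchain. The names `subject_key` and `ProgramRegistry` are hypothetical, and `"example-program-id"` is a placeholder, not a real programId returned by Nillion.

```python
import hashlib
from typing import Dict, Optional


def subject_key(discord_id: str, phone_number: str) -> str:
    """Hypothetical helper: hash a combination of a subject's known identifiers.

    Identifiers are normalized first so that two users who know the same
    subject derive the same key. The "|" separator is assumed not to occur
    in either identifier.
    """
    normalized_discord = discord_id.strip().lower()
    normalized_phone = phone_number.strip().replace(" ", "")
    combined = f"{normalized_discord}|{normalized_phone}"
    return hashlib.sha256(combined.encode("utf-8")).hexdigest()


class ProgramRegistry:
    """Hypothetical in-memory stand-in for the storage layer (backend or blockchain)."""

    def __init__(self) -> None:
        self._programs: Dict[str, str] = {}

    def publish(self, subject_hash: str, program_id: str) -> None:
        # Assumption: one program per subject; a second registration is rejected.
        if subject_hash in self._programs:
            raise ValueError("a program is already registered for this subject")
        self._programs[subject_hash] = program_id

    def lookup(self, subject_hash: str) -> Optional[str]:
        # Returns None when no program has been published for this subject.
        return self._programs.get(subject_hash)


if __name__ == "__main__":
    registry = ProgramRegistry()
    # The deploying user publishes the programId returned by the network (placeholder here).
    registry.publish(subject_key("123456789012345678", "+1 555 0100"), "example-program-id")
    # Another user who knows the same identifiers derives the same key and finds the program.
    print(registry.lookup(subject_key(" 123456789012345678 ", "+15550100")))  # prints "example-program-id"
```

Rejecting a second registration is only one possible policy; the flow does not say what should happen when two users deploy programs for the same subject.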

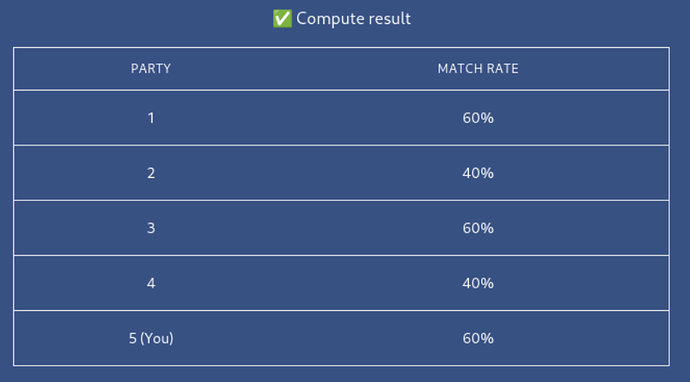

The final output of the current program looks something like this. In this example, the person executing the computation can see that 60% of the parties entered the same BrightID as they did, while the other 40% all entered a single different BrightID. This subject may therefore be a sybil attacker who used multiple BrightIDs to connect with different people.
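To make the 60% / 40% output concrete, the following plain-Python sketch reproduces the per-party calculation, assuming five parties (the example only gives percentages): three submit one BrightID and two submit another. It computes everything in the clear, so it only illustrates the arithmetic, not the secret computation on Nillion, where each party's input stays hidden. The function names and the `looks_like_sybil` rule (flag a subject when more than one distinct BrightID was submitted) are hypothetical.

```python
from collections import Counter
from typing import Dict, List


def same_string_shares(submissions: List[str]) -> List[float]:
    """For each party, the percentage of all parties (itself included)
    that submitted the same string.

    Written as pairwise equality checks rather than a dictionary lookup,
    since a secret program presumably cannot use hidden values as keys.
    """
    n = len(submissions)
    return [
        100.0 * sum(1 for other in submissions if other == mine) / n
        for mine in submissions
    ]


def distribution(submissions: List[str]) -> Dict[str, float]:
    """Percentage of parties that submitted each distinct string."""
    n = len(submissions)
    return {value: 100.0 * count / n for value, count in Counter(submissions).items()}


def looks_like_sybil(submissions: List[str]) -> bool:
    """Hypothetical rule: more than one distinct BrightID for the same subject."""
    return len(set(submissions)) > 1


if __name__ == "__main__":
    # Assumed example: 5 parties, 3 submit one BrightID and 2 submit another.
    submissions = ["brightid-A"] * 3 + ["brightid-B"] * 2
    print(same_string_shares(submissions))  # [60.0, 60.0, 60.0, 40.0, 40.0]
    print(distribution(submissions))        # {'brightid-A': 60.0, 'brightid-B': 40.0}
    print(looks_like_sybil(submissions))    # True
```

The pairwise form costs N² comparisons, which is negligible for small N and keeps the logic close to the per-party description of the program.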

I tried to find a solution that would make the list of hashed identifiers in the system non-searchable, as described in the post, to prevent someone from breaking the system by entering random BrightIDs into programs and marking people as sybils when they aren’t. However, gatekeeping the platform so that only Aura Players can use it already resolves this issue and removes the need for this feature.