Fighting small-scale sybil attacks with known identifiers

In this post, I’ll describe how we can use existing unique identifiers that researchers already know to drastically reduce small-scale sybil attacks in a privacy-preserving, decentralized way.

Background

BrightID has been effective in defeating large-scale sybil attacks through a simple process of connection parties, but its goal is to reduce even small-scale sybil attacks enough to enable per-person distributions, up to and including a full Universal Basic Income.

BrightID Aura is being developed to become the ideal toolbox for sybil hunters. I’m proposing a new Aura tool to help verifiers and elite sybil hunters achieve BrightID’s goal.

Aura

To receive an Aura verification of uniqueness, a BrightID user must receive a positive honesty rating from one or more Aura players they already know.

Aura players research the honesty of BrightID users they know, and they evaluate the honesty and efficacy of other Aura players.

Small-scale sybil attack recipe

It’s helpful to know what we’re trying to defeat. A small-scale sybil attack on the Aura verification system would proceed as follows.

- Create two BrightID accounts.

- Have one or more Aura players verify the first account.

- Have Aura players other than those used in step two verify the second account.

Current method for detecting an attack

Aura players should know the users they’re verifying. Ideally, they will also have BrightID connections to each user’s closest friends and relatives, so they can confirm that those people have connected to the account being verified. Missing connections could be a sign of the attack outlined above, in which the user’s connections are split between two accounts. An honest user can fix the problem by creating the missing connections; an attacker can’t, and will be exposed.
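The check an Aura player performs here boils down to a set difference between the contacts they expect to see and the connections the account actually has. A minimal sketch, assuming the player can list the BrightIDs of the user’s friends and relatives they already know and can see the account’s connections; `missing_connections` and the sample data are hypothetical.

```python
from typing import Iterable, Set


def missing_connections(expected_contacts: Iterable[str], user_connections: Iterable[str]) -> Set[str]:
    """Return contacts the Aura player expected to be connected to the user but aren't.

    Hypothetical inputs: `expected_contacts` are BrightIDs of friends and relatives
    the player already knows; `user_connections` are the BrightIDs actually
    connected to the account under research.
    """
    return set(expected_contacts) - set(user_connections)


# Example: the sister and best friend are connected, but the colleague is not.
expected = {"sister", "best-friend", "colleague"}
connected = {"sister", "best-friend", "neighbor"}
gaps = missing_connections(expected, connected)
if gaps:
    # A gap is a warning sign, not proof: an honest user can close it by connecting.
    print("Ask the user to connect with:", sorted(gaps))
```

Extra connections (like `neighbor` above) are ignored on purpose: the signal is what is missing, not what is present.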

Comparing notes

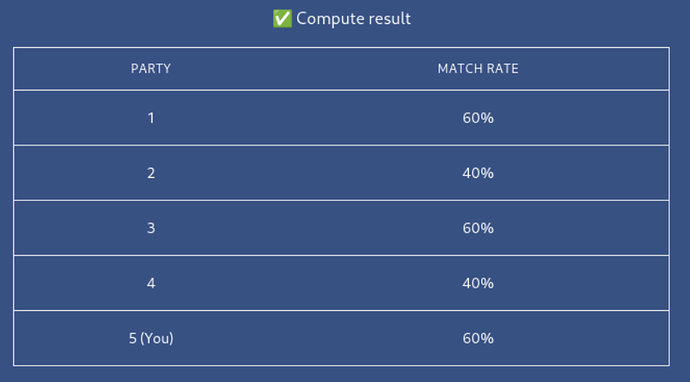

If two Aura players helping to verify the same person already know each other, they can compare notes on which BrightID each of them is connected to for that person, to make sure it’s the same account.

Known identifiers tool

The known identifiers (KI) tool I’m proposing automates and extends the process of “comparing notes” described above, and it works even when the Aura players helping to verify a person don’t know each other.

User interface

The KI tool lists various kinds of unique identifiers. An Aura player enters the ones they already know for the person they’re researching. For example, the player might already know that person’s primary phone number, email address, and Twitter account.

The player will be informed whether the check has passed or failed for each identifier.

In the case of failure, combinations of identifiers can be checked.

Behind the scenes

Behind the scenes, the KI tool hashes the known identifiers and creates (or joins) a unique communication channel for each hash. These communication channels aren’t searchable: they can only be found by players who already know the identifier being used.
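One way to derive a channel address from a known identifier is to normalize it and hash it, so two players who know the same phone number arrive at the same channel without the identifier itself ever being published. A sketch assuming SHA-256, simple normalization rules, and a per-type prefix; the post doesn’t specify any of these, and `normalize` and `channel_id` are hypothetical names. Because phone numbers and email addresses have relatively little entropy, a real design might prefer a deliberately slow hash so the set of channels can’t be cheaply brute-forced.

```python
import hashlib
import re


def normalize(kind: str, value: str) -> str:
    """Canonicalize an identifier so every player who knows it hashes the same bytes."""
    value = value.strip()
    if kind == "phone":
        return re.sub(r"[^\d+]", "", value)  # keep only digits and '+'
    if kind in ("email", "twitter"):
        return value.lower().lstrip("@")     # case-fold; drop a leading '@' on handles
    return value


def channel_id(kind: str, value: str) -> str:
    """Hash a typed, normalized identifier into a channel address (assumed scheme)."""
    data = f"aura-ki/{kind}/{normalize(kind, value)}".encode("utf-8")
    return hashlib.sha256(data).hexdigest()
```

Including the identifier type in the hashed bytes keeps, say, a Twitter handle and an email local part that happen to share the same text from landing in the same channel.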

In each channel, the KI tool registers each Aura player under a new identifier used only in that channel. This lets Aura players subscribe to updates in the channel without learning each other’s identities through this protocol, which could compromise the privacy of the person under research.
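A sketch of channel-scoped registration, assuming a fresh random pseudonym is generated for every (player, channel) pair and only the pseudonym is visible to other participants. Pseudonyms from different channels can’t be linked to each other or to the player. The `Registry` class is hypothetical and simulates everything in one process; in a decentralized deployment, each player’s own client would hold only its own entries.

```python
import secrets
from typing import Dict, List, Tuple


class Registry:
    """Issues per-channel pseudonyms so participants never see each other's identity."""

    def __init__(self) -> None:
        # (player, channel) -> pseudonym; private to the player's own KI client in practice.
        self._pseudonyms: Dict[Tuple[str, str], str] = {}
        # channel -> pseudonyms of subscribers, the only thing other participants see.
        self._subscribers: Dict[str, List[str]] = {}

    def join(self, player: str, channel: str) -> str:
        """Return the player's pseudonym for this channel, creating it on first join."""
        key = (player, channel)
        if key not in self._pseudonyms:
            pseudonym = secrets.token_hex(16)  # random, so unlinkable across channels
            self._pseudonyms[key] = pseudonym
            self._subscribers.setdefault(channel, []).append(pseudonym)
        return self._pseudonyms[key]

    def subscribers(self, channel: str) -> List[str]:
        """List the pseudonyms subscribed to a channel."""
        return list(self._subscribers.get(channel, []))
```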

A new participant in a channel executes the Socialist Millionaire Protocol with one of the previous participants to prove, without revealing it, that they’ve selected the same BrightID for the person being researched as everyone else in the channel. This is critical to privacy: otherwise an attacker could pose as a researcher to find out which BrightID corresponds to a particular unique identifier, such as a phone number or Twitter account.
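For readers unfamiliar with the Socialist Millionaire Protocol, here is a minimal sketch of its core exchange, modeled on the variant used in Off-the-Record Messaging. Both parties are simulated in one function. Each side’s secret is a hash of the BrightID it selected, and each side learns only whether the two match. Assumptions to note: the group is a freshly generated 128-bit safe prime, far too small for real use; the zero-knowledge proofs that the full protocol attaches to each message to stop a cheating party from sending malformed values are omitted; and all function names are hypothetical. It requires Python 3.8+ for `pow(b, -1, p)`.

```python
import hashlib
import secrets


def is_probable_prime(n: int, rounds: int = 32) -> bool:
    """Miller-Rabin probabilistic primality test."""
    if n < 2:
        return False
    for small in (2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37):
        if n % small == 0:
            return n == small
    d, r = n - 1, 0
    while d % 2 == 0:
        d, r = d // 2, r + 1
    for _ in range(rounds):
        x = pow(secrets.randbelow(n - 3) + 2, d, n)
        if x in (1, n - 1):
            continue
        for _ in range(r - 1):
            x = pow(x, 2, n)
            if x == n - 1:
                break
        else:
            return False
    return True


def safe_prime(bits: int):
    """Return (p, q) where q is prime and p = 2q + 1 is prime."""
    while True:
        q = secrets.randbits(bits - 1) | (1 << (bits - 2)) | 1
        if is_probable_prime(q) and is_probable_prime(2 * q + 1):
            return 2 * q + 1, q


P, Q = safe_prime(128)  # demo-sized group; real use needs a standard group of 2048+ bits
G1 = 4                  # 2^2 is a quadratic residue mod P, so it generates the order-Q subgroup


def rand_exp() -> int:
    return secrets.randbelow(Q - 1) + 1  # uniform in [1, Q - 1]


def div(a: int, b: int) -> int:
    return a * pow(b, -1, P) % P


def secret_of(brightid: str) -> int:
    return int.from_bytes(hashlib.sha256(brightid.encode()).digest(), "big") % Q


def smp(newcomer_brightid: str, member_brightid: str):
    """Return (newcomer_result, member_result): True iff both selected the same BrightID."""
    x, y = secret_of(newcomer_brightid), secret_of(member_brightid)
    # Message 1, newcomer -> member: g2a, g3a
    a2, a3 = rand_exp(), rand_exp()
    g2a, g3a = pow(G1, a2, P), pow(G1, a3, P)
    # Message 2, member -> newcomer: g2b, g3b, Pb, Qb
    b2, b3, r = rand_exp(), rand_exp(), rand_exp()
    g2b, g3b = pow(G1, b2, P), pow(G1, b3, P)
    g2, g3 = pow(g2a, b2, P), pow(g3a, b3, P)        # member's copy of the shared bases
    pb, qb = pow(g3, r, P), pow(G1, r, P) * pow(g2, y, P) % P
    # Message 3, newcomer -> member: Pa, Qa, Ra
    s = rand_exp()
    g2n, g3n = pow(g2b, a2, P), pow(g3b, a3, P)      # newcomer derives the same bases
    pa, qa = pow(g3n, s, P), pow(G1, s, P) * pow(g2n, x, P) % P
    ra = pow(div(qa, qb), a3, P)
    # Message 4, member -> newcomer: Rb. Member checks Rab = Ra^b3 against Pa/Pb.
    rb = pow(div(qa, qb), b3, P)
    member_result = pow(ra, b3, P) == div(pa, pb)
    # Newcomer checks Rab = Rb^a3 against Pa/Pb.
    newcomer_result = pow(rb, a3, P) == div(pa, pb)
    return newcomer_result, member_result
```

The check works because Rab equals (Pa/Pb) multiplied by g2 raised to a3·b3·(x − y), and that extra factor is 1 exactly when x = y. Neither transcript reveals x or y, so a fake researcher who selects a guessed BrightID learns only "match" or "no match".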

If the protocol fails for any participant, the channel is permanently closed: the identifier under research is marked as failed and can’t be used as evidence of a person’s honesty. All participants in the channel are notified of this outcome. A failure could result from either a sybil attack or a griefing attack against an honest BrightID user. The latter can sometimes be worked around with identifier combinations that griefers won’t know.
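The failure rule reads naturally as a small state machine. A minimal sketch, assuming a `check` callable that stands in for a full Socialist Millionaire Protocol run (the example passes a plain equality test only to keep it short), and treating the stored BrightIDs as values each participant would hold privately in practice. The `KIChannel` class and its status strings are hypothetical.

```python
from typing import Callable, List


class KIChannel:
    """One channel per hashed identifier; a single failed check closes it for good."""

    def __init__(self, check: Callable[[str, str], bool]) -> None:
        self.check = check                 # stand-in for an SMP run; True on match
        self.selections: List[str] = []    # each participant's private selection (simulated)
        self.status = "open"               # "open" or "failed"
        self.notifications: List[str] = [] # messages broadcast to every participant

    def join(self, selected_brightid: str) -> str:
        """Add a participant and report 'waiting', 'passed', or 'failed'."""
        if self.status == "failed":
            return "failed"  # permanently closed; the identifier can't count as evidence
        if not self.selections:
            self.selections.append(selected_brightid)
            return "waiting"  # nobody to compare with yet
        # The newcomer runs the check with one previous participant (here, the latest).
        if not self.check(selected_brightid, self.selections[-1]):
            self.status = "failed"
            self.notifications.append("identifier failed: possible sybil or griefing attack")
            return "failed"
        self.selections.append(selected_brightid)
        return "passed"
```

Usage: `ch = KIChannel(lambda a, b: a == b)`, then `ch.join("X")` waits, a second `ch.join("X")` passes, and `ch.join("Y")` fails and closes the channel for everyone, including later honest joiners.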

The above process is automated and happens without any input from the Aura players, other than entering the known identifiers in the user interface.

The key to preserving privacy is that the Socialist Millionaire Protocol lets us learn whether users are referring to the same BrightID without anyone being able to learn which BrightIDs are being checked.

Identifier combinations

If checks on individual unique identifiers fail, the identifiers should be combined, first in groups of two, then in groups of three, and so on. For example, if a phone number check fails, a phone number + email check should be tried. A combination check has a better chance of withstanding a griefing attack: an attacker might grief someone’s Twitter account without also knowing their phone number, or that the two belong together.
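Generating combinations in the order described, pairs first and then triples, maps directly onto `itertools.combinations`. A sketch, assuming a combination is only worth trying if it includes at least one identifier whose individual check failed; the phone + email example suggests this, but the post doesn’t state it as a rule. `candidate_combinations` is hypothetical.

```python
from itertools import combinations
from typing import Iterator, Sequence, Set, Tuple


def candidate_combinations(known: Sequence[str], failed: Set[str]) -> Iterator[Tuple[str, ...]]:
    """Yield identifier groups of size 2, 3, ... that include at least one failed identifier."""
    for size in range(2, len(known) + 1):
        for combo in combinations(known, size):
            if failed.intersection(combo):
                yield combo
```

With `known = ["phone", "email", "twitter"]` and only `phone` failing, this yields phone + email, phone + twitter, and then all three; email + twitter is skipped because both passed individually.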

Hashing combinations before checking them is especially important to avoid revealing links between identifiers to people who don’t already know them. Hashing is done behind the scenes.
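For two players to land in the same combination channel, they must hash the same bytes regardless of the order in which they entered the identifiers, and the resulting hash must not reveal which identifiers went into it. A sketch assuming SHA-256 and a canonical encoding: each typed identifier is length-prefixed so distinct inputs can’t collide through concatenation, and the parts are sorted before hashing. The encoding and the `combination_channel_id` name are hypothetical.

```python
import hashlib
from typing import Iterable, Tuple


def combination_channel_id(identifiers: Iterable[Tuple[str, str]]) -> str:
    """Hash (kind, normalized value) pairs into one order-independent channel ID."""
    parts = sorted(f"{kind}:{value}".encode("utf-8") for kind, value in identifiers)
    h = hashlib.sha256(b"aura-ki/combination/v1")  # domain separation from single-identifier channels
    for part in parts:
        h.update(len(part).to_bytes(4, "big"))     # length prefix keeps boundaries unambiguous
        h.update(part)
    return h.hexdigest()
```

Someone who knows only the Twitter handle can’t compute the phone + Twitter channel ID, so the existence of that channel reveals nothing to them about which phone number goes with the handle.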

Why this works

Consider the sybil attack recipe above. In each case, the honesty researcher (Aura player) already knows some unique identifiers used by the BrightID user, such as their phone number, Twitter account, or primary email address. If a BrightID user presents one BrightID account to one researcher and a different one to another, the protocol, and therefore the check, will fail because the BrightIDs don’t match.

These unique identifiers are never revealed publicly in conjunction with a BrightID, and never revealed at all except to people who already know them. Contrast this with government processes and many websites, which require people to reveal information about themselves to people they don’t know, including unique identifiers such as physical addresses and email addresses.